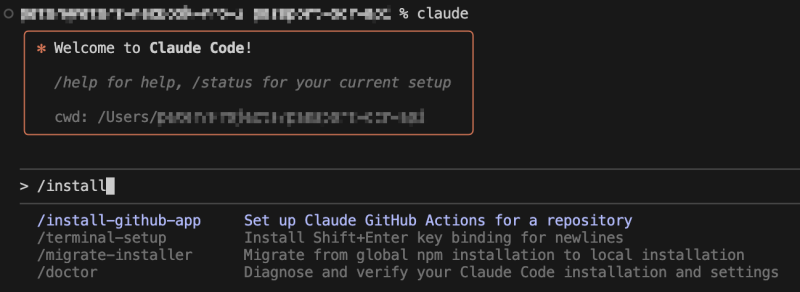

I previously experimented with Cursor’s BugBot as a code reviewer. However, now that BugBot costs $40 per month, I decided to give Claude Code a shot, since I'm already using it for coding. Setting up Claude Code as a reviewer on a GitHub repository is straightforward: open a Claude session in Visual Studio Code and run the command:

/install-github-app

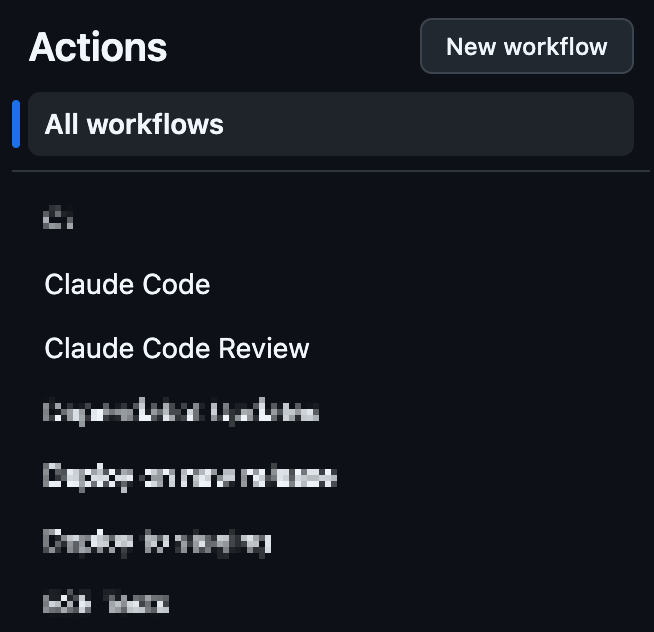

You’ll then be prompted to link Claude to your GitHub repository, which automatically installs a GitHub Action within it.

Configuring Claude as a reviewer

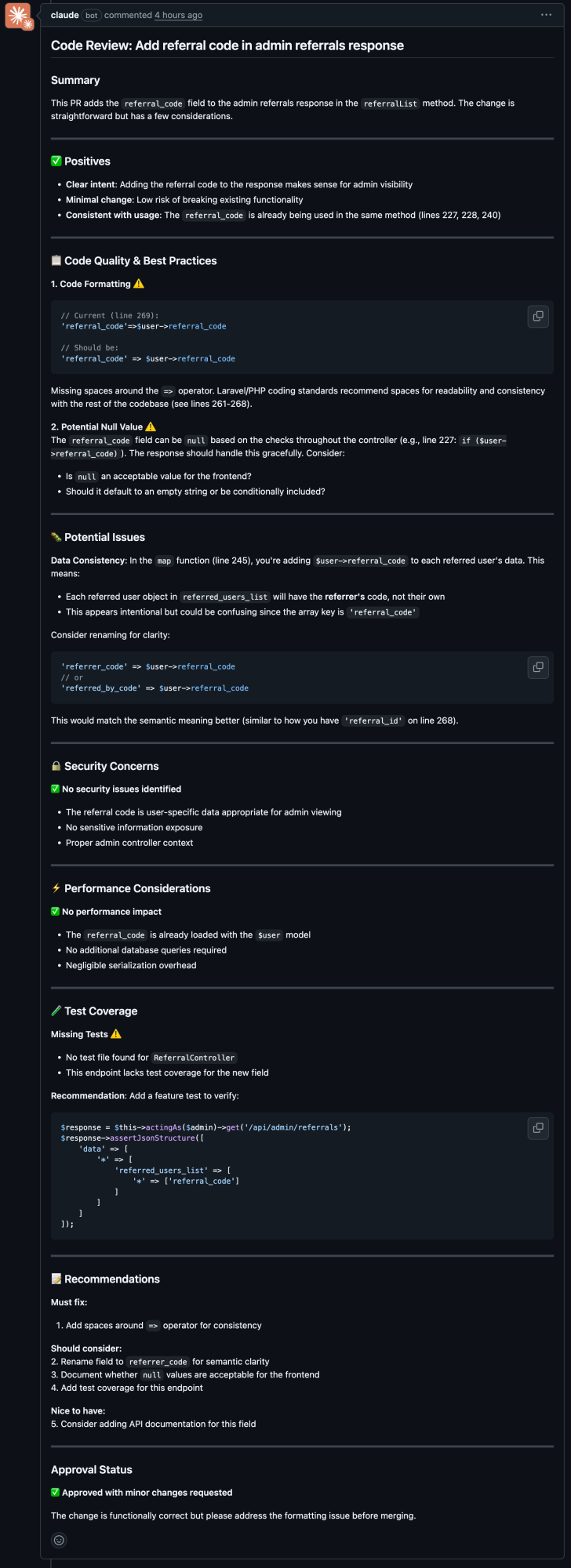

The action triggers automatically whenever a pull request is opened or new commits are pushed to it. The nice part about this setup is that the review prompt used by Claude is fully visible and customisable within the GitHub Action itself. Here’s the default prompt it uses:

Please review this pull request and provide feedback on:

- Code quality and best practices

- Potential bugs or issues

- Performance considerations

- Security concerns

- Test coverage

Use the repository's CLAUDE.md for guidance on style and conventions. Be constructive and helpful in your feedback.

Use `gh pr comment` with your Bash tool to leave your review as a comment on the PR.

This produces the following comments on a pull request:

Every time the pull request changes, Claude reruns and posts another comment like this, quickly flooding the conversation with noise. My first tweak after installing Claude is to remove the `synchronize` event from the action trigger, which by default looks like this:

    on:
      pull_request:
        types: [opened, synchronize]

Adjusting the review behaviour
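For reference, here is a minimal sketch of the trigger with the push-driven event removed, so the review runs only when a pull request is first opened. It assumes the rest of the generated workflow file stays untouched; `opened` and `synchronize` are the standard GitHub activity type names for the `pull_request` event.

```yaml
# Sketch: review once, when the pull request is opened.
# Dropping `synchronize` stops the workflow from rerunning on every new push.
on:
  pull_request:
    types: [opened]
```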

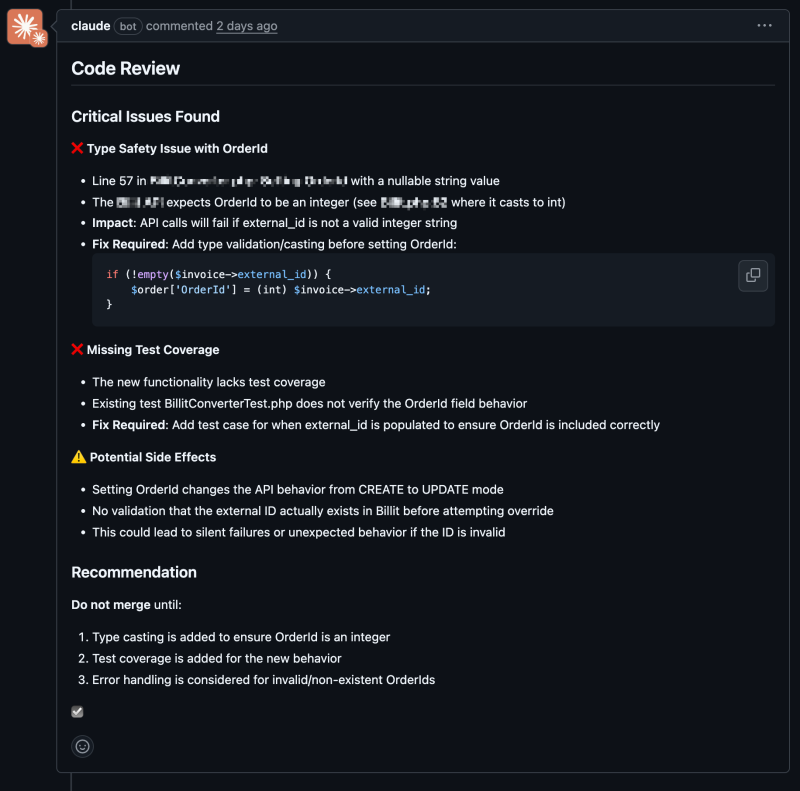

I prefer Claude to review each pull request only once. While there’s a case for having it analyse every commit, we found that approach overwhelming and noisy. On top of that, Claude’s default feedback tends to be overly picky, especially about null reference checks. I’d rather it focus on genuinely essential issues and keep its comments concise. Thankfully, it’s easy to adjust the review behaviour by tweaking the prompt. Here’s the version I settled on:

Please analyze the changes in this PR and focus on identifying critical issues related to:

- Potential bugs or issues

- Performance

- Security

- Correctness

If critical issues are found, list them in a few short bullet points. If no critical issues are found, provide a simple approval.

Sign off with a checkbox emoji: (approved) or (issues found).

Keep your response concise. Only highlight critical issues that must be addressed before merging. Skip detailed style or minor suggestions unless they impact performance, security, or correctness.

Use the repository's CLAUDE.md for guidance on style and conventions. Be constructive and helpful in your feedback.

Use `gh pr comment` with your Bash tool to leave your review as a comment on the PR.

Now Claude’s comment becomes:

With this prompt, Claude focuses solely on critical issues and provides a concise summary of suggested changes. Combined with limiting it to a single review per pull request, it’s proven to be a valuable time-saver. I’ve found it especially effective in smaller teams, where it helps speed up approvals and catches bugs that might slip past human reviewers. Of course, as with any AI tool, human oversight remains essential. Each flagged issue still needs careful evaluation. After using this setup for about three months, Claude has already helped us catch and fix several bugs before they reached the QA stage.
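To show where the prompt and the single-review trigger fit together, here is a rough sketch of what the whole workflow might look like. Treat it as an illustration rather than the exact file the installer writes: the action version tag, the input names (`prompt`, `claude_code_oauth_token`, `claude_args`), the secret name, the permissions block, and the list of allowed tools are all assumptions based on the `anthropics/claude-code-action` workflow at the time of writing, so compare them against the file in your own repository before copying anything.

```yaml
# Sketch only: names below are assumptions, check them against your generated workflow.
name: Claude Code Review

on:
  pull_request:
    types: [opened]   # one review per pull request

jobs:
  claude-review:
    runs-on: ubuntu-latest
    permissions:
      contents: read
      pull-requests: read
      issues: read
      id-token: write
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4
        with:
          fetch-depth: 1

      - name: Run Claude Code Review
        uses: anthropics/claude-code-action@v1   # assumed version tag
        with:
          # Assumed secret name, created when linking Claude to the repository.
          claude_code_oauth_token: ${{ secrets.CLAUDE_CODE_OAUTH_TOKEN }}
          prompt: |
            Please analyze the changes in this PR and focus on identifying critical issues related to:
            - Potential bugs or issues
            - Performance
            - Security
            - Correctness

            If critical issues are found, list them in a few short bullet points. If no critical issues are found, provide a simple approval.

            Keep your response concise. Only highlight critical issues that must be addressed before merging. Skip detailed style or minor suggestions unless they impact performance, security, or correctness.

            Use the repository's CLAUDE.md for guidance on style and conventions. Be constructive and helpful in your feedback.

            Use `gh pr comment` with your Bash tool to leave your review as a comment on the PR.
          # Assumed tool allow-list so Claude can read the diff and post its comment.
          claude_args: '--allowed-tools "Bash(gh pr comment:*),Bash(gh pr diff:*),Bash(gh pr view:*)"'
```

Keeping the prompt inline in the workflow means any change to the review behaviour goes through a normal pull request, so the team can see and discuss it like any other change.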

Claude Code or Cursor's BugBot?

I prefer Claude's reviews over BugBot's. Like most AI reviewers, Claude still has a tendency to flag null reference checks, but when it comes to identifying actual bugs, the difference has been significant. Given the choice, I use Claude.

Cost

From a cost perspective, Anthropic’s offering is quite reasonable. A Claude Pro subscription costs $20 per month and includes the ability to use Claude as a code reviewer. While there are usage limits, our smaller development team hasn’t come close to reaching them in the three months we’ve been using it.
