One of the many AI buzzwords in 2025 is "MCP server." An MCP (Model Context Protocol) server wraps the API of a specific tool and exposes it as a service that your LLM can interact with. There's plenty of information on the web if you want to know more. New MCP servers are appearing all the time, and Microsoft's Playwright MCP server got me thinking. Could smaller teams use it to fill the testing gap?

Configuring an MCP

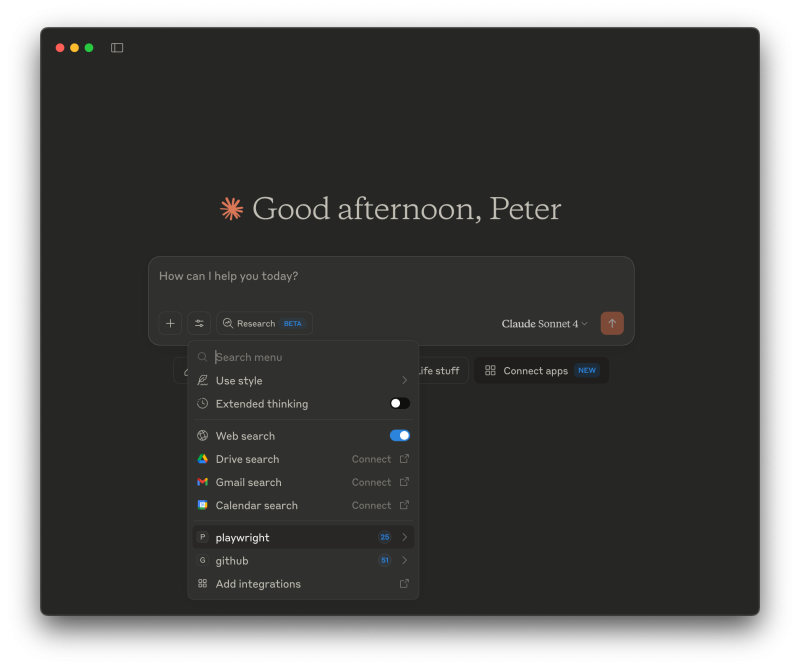

I'm using Claude, as it's the easiest way I've found to get started with MCPs in combination with an LLM. You can also use ChatGPT, but it requires a Pro subscription, which is more expensive.

To get started, open Claude's settings and paste the Playwright MCP server configuration into the claude_desktop_config.json file.

    {
      "mcpServers": {
        "playwright": {
          "command": "npx",
          "args": ["@playwright/mcp@latest"]
        }
      }
    }

This makes Playwright available in Claude Desktop. After you restart Claude, it should have access to the Playwright functions.
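If the config file already lists other MCP servers, pasting the snippet over it would drop them. Here is a minimal Python sketch of merging the entry instead. The function names `add_mcp_server` and `update_config_file` are made up for this example, and the path to claude_desktop_config.json is passed in because its location differs per operating system.

```python
import json
from pathlib import Path


def add_mcp_server(config: dict, name: str, command: str, args: list[str]) -> dict:
    """Return a copy of config with one more entry under "mcpServers"."""
    servers = dict(config.get("mcpServers", {}))
    servers[name] = {"command": command, "args": list(args)}
    # Keep every other top-level setting untouched.
    return {**config, "mcpServers": servers}


def update_config_file(path: Path) -> None:
    """Merge the Playwright entry into the config file at path."""
    text = path.read_text() if path.exists() else ""
    config = json.loads(text) if text.strip() else {}
    updated = add_mcp_server(config, "playwright", "npx", ["@playwright/mcp@latest"])
    path.write_text(json.dumps(updated, indent=2) + "\n")
```

Calling `update_config_file` with the path to your own config file leaves existing servers in place and only adds or overwrites the "playwright" entry.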

Claude as tester

Off to a good start! Startups are always limited in resources, and more AI tools are emerging to help them bridge the gap with larger teams. As I often work with small startup teams, there’s usually no dedicated QA member. Quality assurance is often a responsibility of the entire team. Automated test suites help maintain product quality, but manual reviews are still common to ensure everything works as expected. This process is time-intensive, and I wanted to use Playwright to ease the manual testing burden on the team.

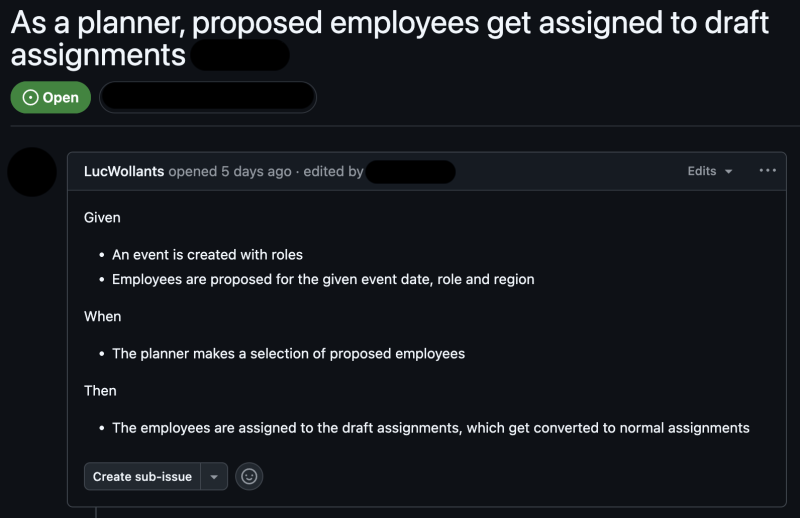

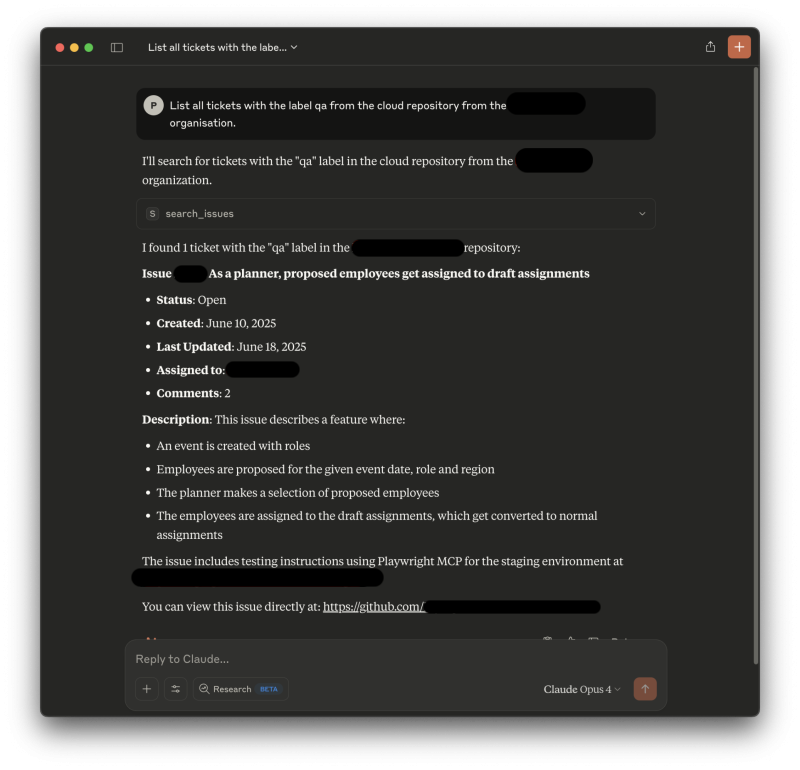

The ticket above is from the team’s Kanban board and is part of a new feature currently in development. Based on the ticket, I gave Claude the following prompt:

Use playwright MCP, go to <testing environment URL>, log in with username <user> and password <password>, create a new event with the role <Role>. You should only add one role, do not press the “Add” button to add a second role. If you did, delete the second role. Once the event is created, add a proposed employee to an empty assignment.

What’s important to note is that I put minimal effort into crafting the prompt. The more effort required to write the prompt, the less it makes sense for Claude to handle the task on our behalf. After feeding the prompt to Claude, it began working immediately. It launched Chromium and used Playwright to navigate the application. While creating an event, it encountered several mandatory fields, which it interpreted and filled out one by one until it completed the form. Notice that I didn’t give Claude any instructions on how to fill out these fields. After creating the event, it continued until it was confident that the task was complete.
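Since the prompt is mostly fixed text with a few placeholders, it can be reused across tickets with almost no extra effort. A small Python sketch of that idea follows. The wording mirrors the prompt above, while the `$`-placeholder names and the function `build_test_prompt` are assumptions of this example, not part of any tool.

```python
from string import Template

# The wording mirrors the prompt above; the $-placeholder names are assumptions.
TEST_PROMPT = Template(
    "Use playwright MCP, go to $url, log in with username $user and password "
    "$password, create a new event with the role $role. You should only add "
    'one role, do not press the "Add" button to add a second role. If you did, '
    "delete the second role. Once the event is created, add a proposed employee "
    "to an empty assignment."
)


def build_test_prompt(url: str, user: str, password: str, role: str) -> str:
    """Fill the placeholders; substitute() raises KeyError if one is missing."""
    return TEST_PROMPT.substitute(url=url, user=user, password=password, role=role)
```

The resulting string is what gets pasted into Claude; only the four values change per ticket or environment.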

Usability testing as a bonus

Interestingly, it also exposed a potential UX flaw. In the first version of my prompt, I didn't include the following sentence:

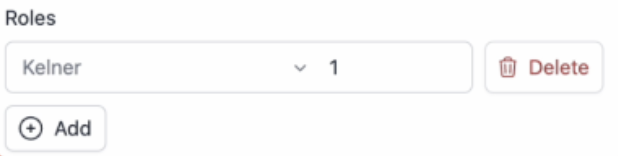

You should only add one role, do not press the “Add” button to add a second role.

And with that first prompt, Claude got stuck on the following component while trying to create an event.

The user is required to fill in at least one role, and the UI provides a row ready for completion for that purpose. Claude misunderstood this and tried to add a role by clicking the Add button, which inserted a second row. Since it didn't complete the second row, it got stuck. I can easily imagine real users running into the same issue. It's not the most intuitive interface. While running the test, Claude clicking through the application was similar to a user going through it. Usability testing came as a free side-effect.

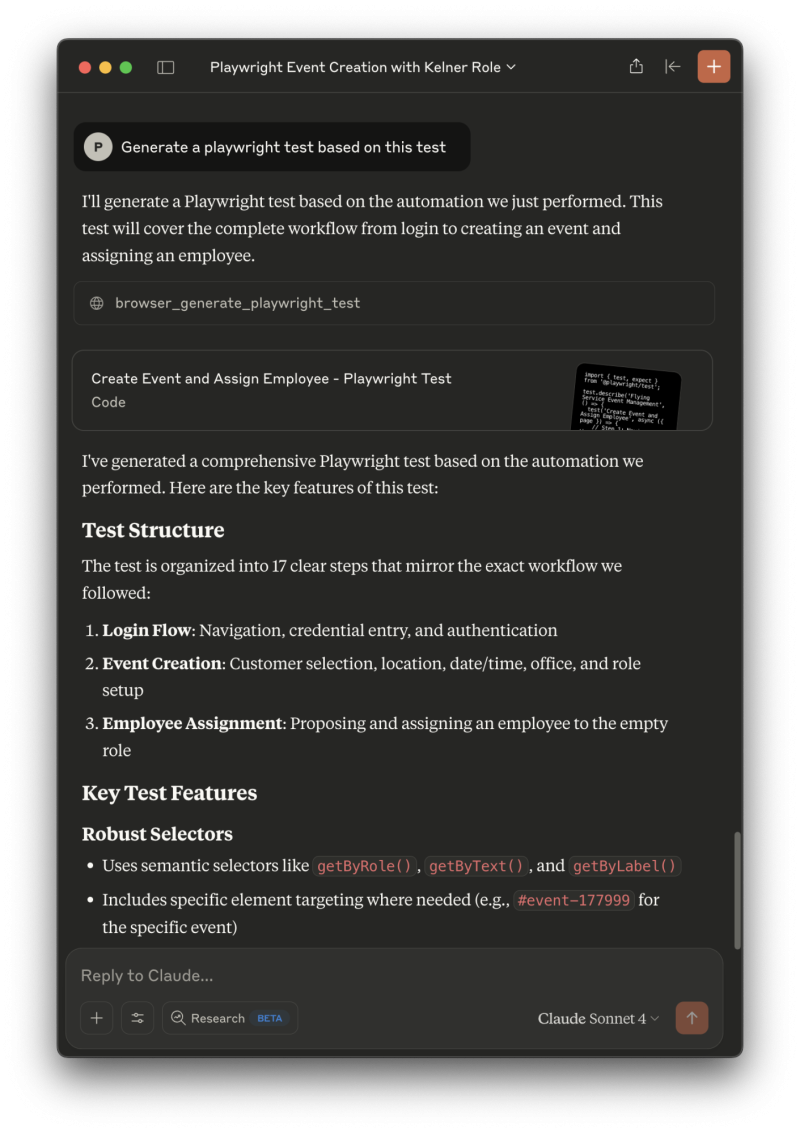

A Playwright test comes for free

What's great about this way of working is that I can simply ask Claude to generate a Playwright test based on the actions it just performed. If it encounters a bug, I can save the test in a suite to track the issue in the current version. Once the bug is resolved, I can rerun the test to verify that everything is working correctly.

Getting Claude to pick up QA tickets

Claude has now successfully used the Playwright MCP to test our ticket. Pretty neat. But why stop there? What if we embedded a testing prompt directly into our GitHub tickets, tagged them with a QA label, and let Claude automatically run tests on all of them? We'd still need to review the results, but it would be a great first pass to catch obvious issues early.
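To make the idea concrete, here is a rough Python sketch of how a testing prompt could be pulled out of labelled tickets. It assumes issues shaped like the objects the GitHub REST API returns (`number`, `body`, and `labels` with a `name` each). The `<!-- qa-prompt -->` markers are a convention invented for this example, not something GitHub or Claude defines, and `extract_qa_prompts` is a hypothetical helper.

```python
import re

QA_LABEL = "qa"
# Hypothetical convention: the prompt sits between two HTML comments in the ticket body.
PROMPT_PATTERN = re.compile(r"<!-- qa-prompt -->(.*?)<!-- /qa-prompt -->", re.DOTALL)


def extract_qa_prompts(issues: list[dict]) -> dict[int, str]:
    """Map issue number to its embedded testing prompt, for QA-labelled issues only."""
    prompts: dict[int, str] = {}
    for issue in issues:
        label_names = {label["name"].lower() for label in issue.get("labels", [])}
        if QA_LABEL not in label_names:
            continue  # not tagged for QA
        match = PROMPT_PATTERN.search(issue.get("body") or "")
        if match:
            prompts[issue["number"]] = match.group(1).strip()
    return prompts
```

Tickets without the label or without an embedded prompt are skipped, so a human can see at a glance which QA tickets still need a prompt written.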

A GitHub MCP server is also available, and it runs via Docker. It requires a classic GitHub Personal Access Token; fine-grained tokens won't work for accessing repositories that belong to GitHub organisations. As with the Playwright MCP, we simply added it to the Claude configuration.

    {
      "mcpServers": {
        "playwright": {
          "command": "npx",
          "args": ["@playwright/mcp@latest"]
        },
        "github": {
          "command": "docker",
          "args": [
            "run",
            "-i",
            "--rm",
            "-e",
            "GITHUB_PERSONAL_ACCESS_TOKEN",
            "ghcr.io/github/github-mcp-server"
          ],
          "env": {
            "GITHUB_PERSONAL_ACCESS_TOKEN": "<your-classic-token>"
          }
        }
      }
    }

Now we can interact directly with our GitHub repositories through Claude. I can ask it to fetch all tickets labelled "qa" from our repository.

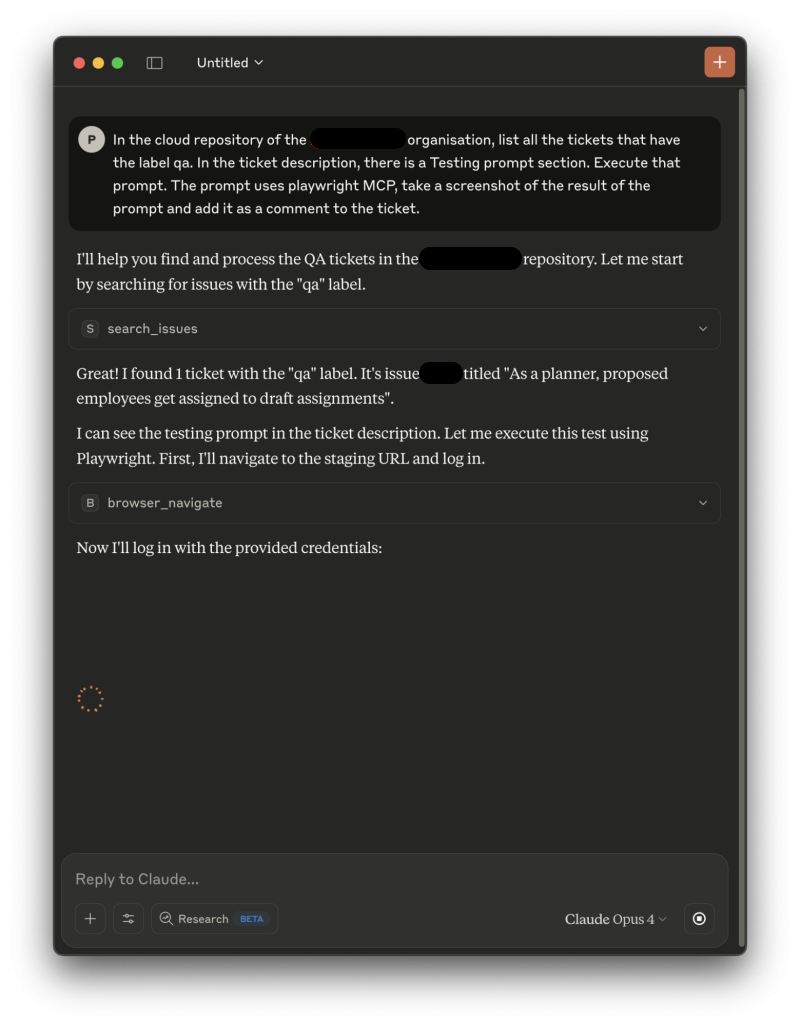

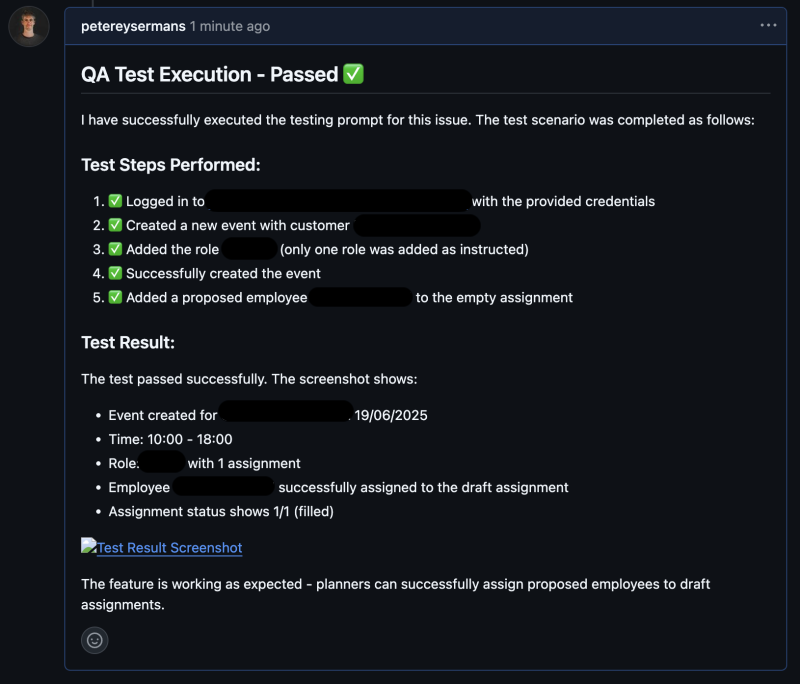

With the following prompt, I asked Claude to run tests on all the tickets it pulled from GitHub.

Claude got to work, methodically following the steps in the prompt. It even added a comment, as instructed, though the screenshot I asked it to upload didn't go through.

Human review required

Claude occasionally deviates from the plan, which is why writing a concise and high-quality prompt is crucial. We know that we can’t blindly rely on AI. It still requires oversight. That said, it was impressive how well Claude handled the task. It successfully created an event without being explicitly told how to fill in the required fields and continued until it believed all tasks were complete.

Startups should incorporate tools like this in their daily process. They can help deliver higher-quality products in less time, getting to market faster, and ultimately, that’s what it’s all about.

Side remark: This experiment used up my entire daily token allowance on Claude.

